In augmented intelligence, a common business problem is how to make a machine or robot move around and perform tasks. To do so, the machine or robot needs to know its location within its environment. Simultaneous localization and mapping (SLAM) addresses this: it is the computational problem of constructing a map of an unknown area while at the same time tracking where the machine or robot is within that map.

Various algorithms tackle this problem. In general, a sensor such as a LiDAR or a camera is used, and the data from that sensor is stitched together to form a map; this is the mapping process. The same sensor can then be used to work out where it is on the map by running a suitable matching algorithm; this is known as localization. With both in place, the machine or robot has a sense of where it is in its local space. Because we use a camera to perform SLAM in this instance, the approach is known as Visual SLAM or VSLAM.

Once the machine or robot knows its location, navigation and other tasks become possible. Like SLAM, navigation has many different path-planning algorithms, ranging from simple waypoint following to graph search methods such as A*. By choosing one of these, the machine can move around the map.
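To make the path-planning step concrete, here is a minimal sketch of A* on a 2D occupancy grid. It is an illustration only, not the planner used in this project: the grid representation (0 for free, 1 for obstacle), the 4-connected movement model, the unit step cost, and the function name `astar` are all assumptions made for the example.

```python
import heapq


def astar(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None.

    Assumptions for this sketch: grid is a list of rows, 0 marks a free
    cell and 1 marks an obstacle, moves are 4-connected with unit cost.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected unit grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    # Heap entries are (estimated total cost, cost so far, cell).
    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # Stale heap entry; a cheaper route was already found.
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None  # Goal is not reachable from start.


# Hypothetical 4x4 map: the only gap in the wall on row 1 is at column 2.
example_grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
example_path = astar(example_grid, (0, 0), (3, 3))
```

In practice the grid would be derived from the map that SLAM produces, for example by projecting the 3D map onto the ground plane and marking occupied cells, and the start cell would come from the localization estimate.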

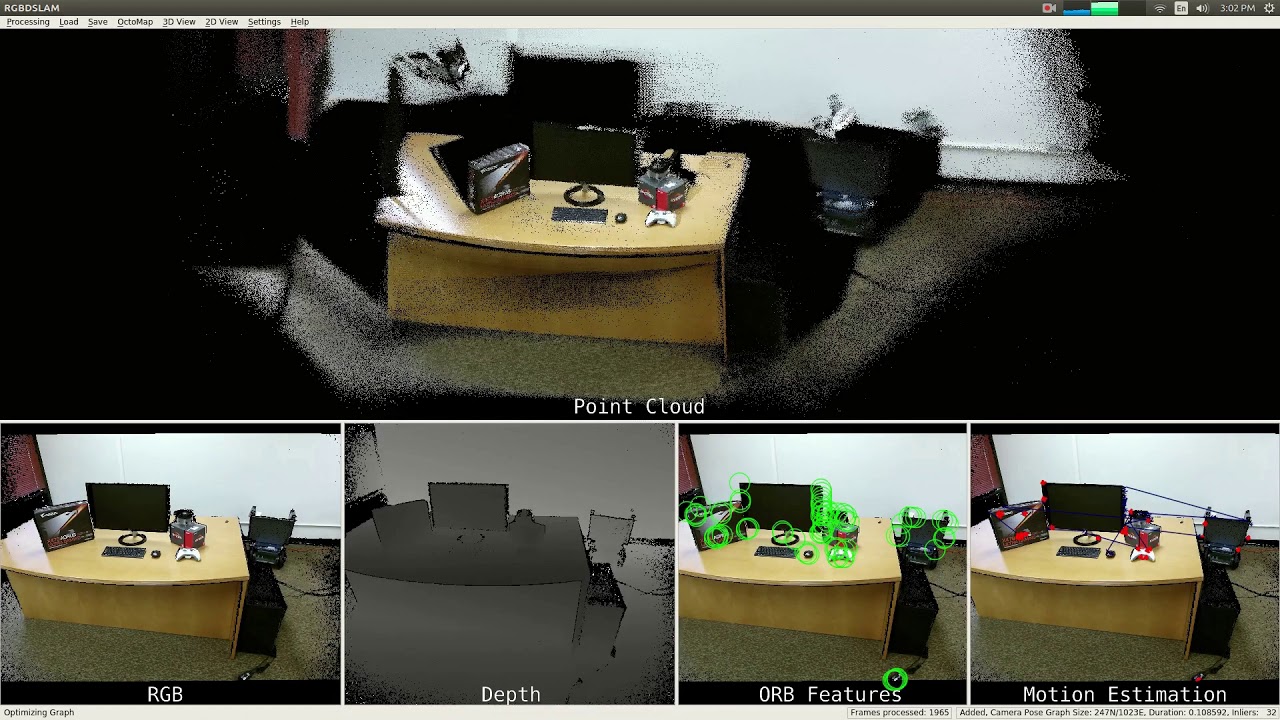

We chose one of the open source SLAM algorithms and applied it to create maps of the kind used in many augmented intelligence applications. Using a single RGB-Depth camera, it can create a dense point cloud of an area. This point cloud can be used in applications such as surveillance robots, exploration of unknown places, and terrain mapping.
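As a rough illustration of how a depth image becomes a point cloud, the sketch below back-projects each valid depth pixel into a 3D point using the standard pinhole camera model. It is not taken from the SLAM implementation used here. The function name `depth_to_points` and the intrinsics `fx`, `fy`, `cx`, `cy` (focal lengths and principal point, in pixels) are assumptions for the example, as is the convention that a depth of zero or less means "no measurement".

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into a list of (x, y, z) camera-frame points.

    Assumptions for this sketch: depth is a list of rows of metric depth
    values, and values <= 0 mark pixels with no valid measurement.
    """
    points = []
    for v, row in enumerate(depth):        # v is the pixel row
        for u, z in enumerate(row):        # u is the pixel column
            if z <= 0:
                continue                   # Skip invalid depth readings.
            # Pinhole model: pixel offset from the principal point,
            # scaled by depth over focal length.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth image with two valid pixels.
example_depth = [
    [0.0, 2.0],
    [1.0, 0.0],
]
example_points = depth_to_points(example_depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

A full pipeline would also transform these camera-frame points into the map frame using the camera pose estimated by SLAM, and would attach the RGB color of each pixel to its point.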

By applying Radeon Open Compute (ROCm) and AMD MIVisionX, we enabled this framework to use OpenVX for feature detection and OpenCL for GPU acceleration.

Applications:

- Creating 3D models of real space

- Creating 3D models for virtual reality

- Creating 3D models of game environments

- Perception for machine, robot, and drone applications